Introduction

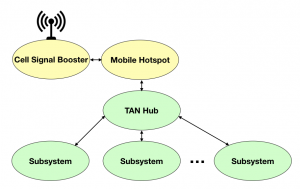

A friend of mine, Scott Lemon, founder and CTO of Wovyn, and I were discussing my travel trailer API when he coined the term trailer area network, or TAN. My TAN will be based on WiFi because WiFi-connected devices are ubiquitous: microcontrollers, commercially available connected devices, mobile phones, and tablets. To this network I’ll attach microcontrollers that control trailer subsystems. These subsystems will connect via WiFi to a trailer hub that exposes their functionality through the travel trailer API (see the figure).

In this figure, Internet connectivity is provided by a mobile hotspot with the help of a cell signal booster. The mobile hotspot supports up to 15 connected devices, one of which will be the TAN Hub. The TAN Hub will create its own subnet to which the subsystems, potentially many of them, will attach. Communications between the subsystems and the TAN Hub may use various protocols, but the TAN Hub will expose their functionality through a REST-based API.
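To make the addressing concrete, here is a minimal sketch using Python's standard `ipaddress` module. It assumes hypothetical address ranges (192.168.1.0/24 for the hotspot LAN and 192.168.50.0/24 for the hub's subnet; neither is taken from the actual devices) and a hypothetical helper, `plan_tan`. What it illustrates is that only the TAN Hub counts against the hotspot's 15-device limit, while the subsystems draw their addresses from the hub's own subnet.

```python
import ipaddress

# Hypothetical address plan: these ranges are assumptions, not device defaults.
HOTSPOT_LAN = ipaddress.ip_network("192.168.1.0/24")  # assumed hotspot LAN
TAN_SUBNET = ipaddress.ip_network("192.168.50.0/24")  # assumed subnet created by the TAN Hub
HOTSPOT_CLIENT_LIMIT = 15  # the hotspot's connection limit, per the post


def plan_tan(subsystems, hotspot_clients):
    """Hypothetical helper: give the hub and each subsystem an address on the TAN subnet.

    `hotspot_clients` lists the devices joined directly to the hotspot (the TAN Hub
    is one of them). Subsystems join the hub's subnet, so they are not counted there.
    """
    if TAN_SUBNET.overlaps(HOTSPOT_LAN):
        raise ValueError("TAN subnet must not overlap the hotspot LAN")
    if len(hotspot_clients) > HOTSPOT_CLIENT_LIMIT:
        raise ValueError("more devices than the hotspot allows")
    usable = list(TAN_SUBNET.hosts())  # 192.168.50.1 .. 192.168.50.254
    if len(subsystems) > len(usable) - 1:
        raise ValueError("TAN subnet is too small for this many subsystems")
    hub_address = usable[0]  # the hub takes the first usable address
    assignments = {name: usable[i + 1] for i, name in enumerate(subsystems)}
    return hub_address, assignments
```

For example, with three hotspot clients (the hub, a phone, and a tablet) and three subsystems, the hub gets 192.168.50.1 and the subsystems get .2 through .4. Adding more subsystems consumes addresses on the hub's subnet, not hotspot slots.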

Components

Three main sets of components are needed to create the described TAN. For Internet connectivity we need a signal booster and a mobile hotspot. We need a server of sorts to act as the TAN Hub, and a WiFi-connected microcontroller unit to use in building the subsystems.

Internet Connectivity

I have selected the AT&T Unite Explore, also known as the Netgear AC815S, as my mobile hotspot. It seems to get good reviews and is reasonably priced. I may experiment with other hotspots in the future.

I acquired a weBoost Drive 4G-M Cell Phone Signal Booster to improve cell reception in remote locations. In the future I may experiment with various antenna configurations to increase the usable range.

Trailer Area Network Hub

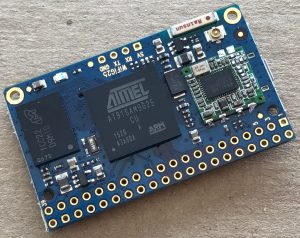

For the TAN Hub I will use the Corewind WiFiG25 microcontroller module described in my original post about my trailer API. This module is based on a 400 MHz ARM processor, has ample memory and built-in WiFi, and runs the Linux operating system.

I expect to add an API management system to this module, along with the communication software it needs to connect to and communicate with trailer subsystems.
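As an illustration of the kind of REST interface the hub could expose, here is a minimal sketch built on Python's standard `http.server` module. It assumes Python 3.7 or later is available on the hub's Linux installation, and the endpoint paths (`/subsystems`, `/subsystems/<name>`), the sample data, and the `run` helper are all hypothetical. A full API management system would likely add authentication, write operations, and live data from the subsystems.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical sample state. On a real hub this would be refreshed from the
# subsystem controllers over the TAN rather than hard-coded.
SUBSYSTEMS = {
    "tank-levels": {"fresh_pct": 80, "grey_pct": 35},
    "awning": {"position": "retracted"},
}


class TanHubHandler(BaseHTTPRequestHandler):
    """Serves GET /subsystems (list of names) and GET /subsystems/<name> (its state)."""

    def _send_json(self, status, body):
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def do_GET(self):
        # Drop any query string, then split "/subsystems/awning" into ["subsystems", "awning"].
        parts = [p for p in self.path.split("?")[0].split("/") if p]
        if parts == ["subsystems"]:
            self._send_json(200, sorted(SUBSYSTEMS))
        elif len(parts) == 2 and parts[0] == "subsystems" and parts[1] in SUBSYSTEMS:
            self._send_json(200, SUBSYSTEMS[parts[1]])
        else:
            self._send_json(404, {"error": "not found"})

    def log_message(self, format, *args):
        pass  # silence per-request logging for this sketch


def run(host="0.0.0.0", port=8080):
    """Hypothetical entry point; port 8080 is an arbitrary choice."""
    ThreadingHTTPServer((host, port), TanHubHandler).serve_forever()
```

Calling `run()` on the hub and requesting `/subsystems` would return `["awning", "tank-levels"]`, while `/subsystems/awning` would return that subsystem's state and any unknown path returns a 404 with a JSON error body.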

Subsystem Controllers

Many trailer subsystems could be exposed and interacted with through an API. Examples include leveling jacks, stabilizers, tank levels, battery levels, solar energy generation, awnings, pop-outs, security, and weather data. Each of these will inevitably require specialized sensors and actuators, but I am hoping to standardize on the microcontroller modules used to control the subsystems and communicate with the TAN Hub.

I will be experimenting with the ESP-12F module, based on the ESP8266 chip. It is an impressive module featuring a 32-bit MCU, integrated WiFi, a TCP/IP stack, and 4 MB of flash memory for user programs, all for $1.74. It can communicate with other hardware using SPI, I2C, serial, and other low-level protocols.

These communication channels, along with the available GPIO lines, will be used to interface the modules to subsystem-specific hardware such as switches and sensors. The integrated WiFi will connect these subsystems to the TAN Hub.
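How a subsystem reports to the hub is still open, since the subsystems and the hub may use various protocols. As one hypothetical option, a controller could send small JSON readings over WiFi. The Python sketch below shows the shape of such an exchange: `encode_reading` builds the payload a subsystem might send, and `decode_reading` validates it on the hub. The field names and both functions are assumptions; on the ESP-12F itself the same message would be produced by the module's firmware (for example, Arduino C++ or MicroPython).

```python
import json
import time

# Hypothetical message schema: field name -> accepted type(s).
REQUIRED_FIELDS = {"subsystem": str, "sensor": str, "value": (int, float), "ts": int}


def encode_reading(subsystem, sensor, value, ts=None):
    """Build the compact JSON payload a subsystem controller might send to the hub."""
    msg = {
        "subsystem": subsystem,
        "sensor": sensor,
        "value": value,
        "ts": int(time.time()) if ts is None else ts,  # Unix seconds
    }
    return json.dumps(msg, separators=(",", ":")).encode("utf-8")


def decode_reading(payload):
    """Parse and validate a reading on the hub side; raises ValueError if malformed."""
    msg = json.loads(payload)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(msg, dict):
        raise ValueError("reading must be a JSON object")
    for field, kind in REQUIRED_FIELDS.items():
        if not isinstance(msg.get(field), kind):
            raise ValueError(f"missing or invalid field: {field}")
    return msg
```

For example, `encode_reading("tank-levels", "fresh_pct", 80, ts=1700000000)` produces `b'{"subsystem":"tank-levels","sensor":"fresh_pct","value":80,"ts":1700000000}'`, which `decode_reading` accepts; a payload missing a required field raises `ValueError`.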

Summary

Future posts will describe various details related to the programming, configuration, and use of the components discussed in this post. There is much to learn, much to do, and a lot of fun to be had.